GSIC

| Addr. | 2-12-1 O-okayama, Meguroku, Tokyo 152-8550 JAPAN |

You are here

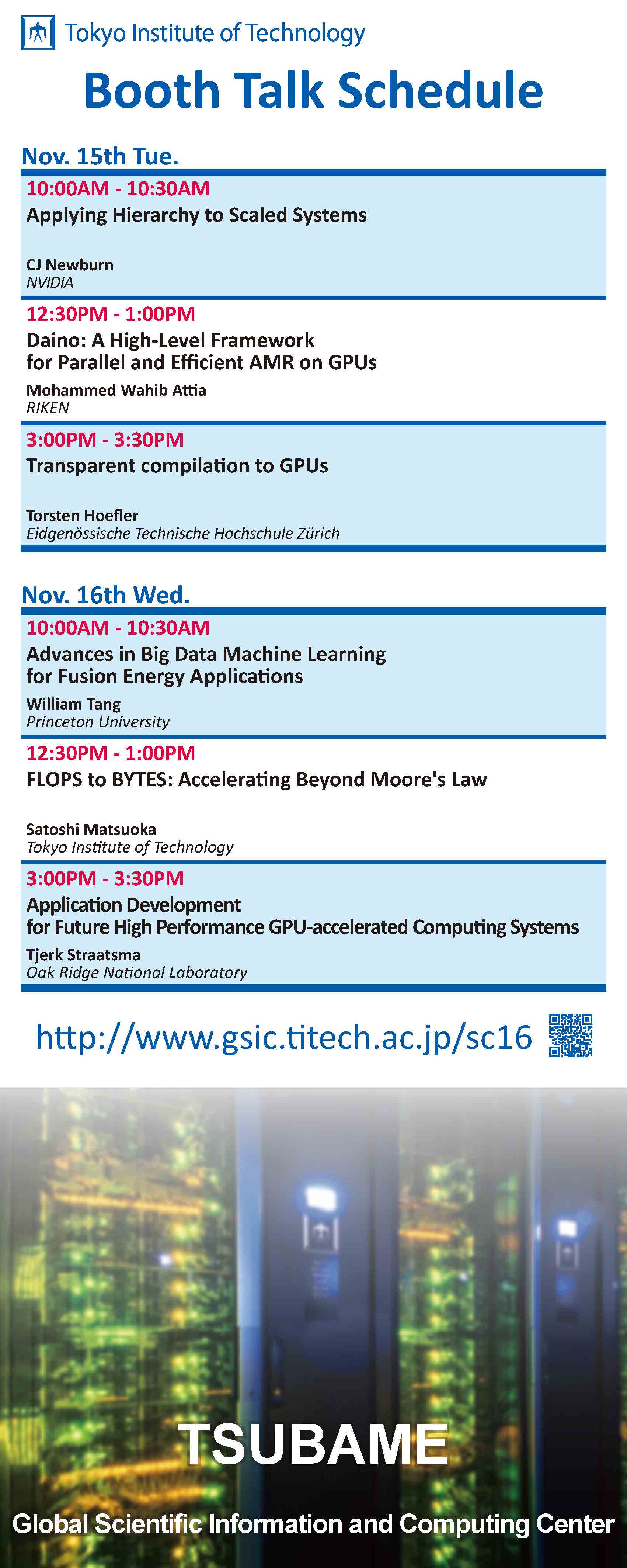

Booth Talk Schedule

| SC16 TOP | News Release | Presentations | Booth Talk Schedule | Booth Posters | Booth Map |

| Title | Transparent compilation to GPUs |

| Speaker | Torsten Hoefler |

| Abstract | Programming today’s increasingly complex heterogeneous hardware is difficult, as it commonly requires the use of data-parallel languages, pragma annotations, specialized libraries, or DSL compilers. Adding explicit accelerator support into a larger code base is not only costly, but also introduces additional complexity that hinders long-term maintenance. We propose a new heterogeneous compiler that brings us closer to the dream of automatic accelerator mapping. Starting from a sequential compiler IR, we automatically generate a hybrid executable that - in combination with a new data management system - transparently offloads suitable code regions. Our approach is almost regression free for a wide range of applications while improving a range of compute kernels as well as two full SPEC CPU applications. We expect our work to reduce the initial cost of accelerator usage and to free developer time to investigate algorithmic changes. |

| Title | Application Development for Future High Performance GPU-accelerated Computing Systems |

| Speaker | Tjerk Straatsma |

| Abstract | The Accelerated Data Analytics and Computing (ADAC) Institute is a consortium of computer centers working together toward common objectives, sharing expertise and identifying promising approaches for future high performance computing architectures. The founding institutions, the Oak Ridge Leadership Computing Facility (OLCF) at ORNL, the Global Scientific Information and Computing Center at Tokyo Institute of Technology, and the Swiss National Supercomputing Center (CSCS) at the Swiss Federal Institute of Technology (ETH, Zürich), are collaborating on common challenges in applications, resource management and performance for the heterogeneous supercomputers managed by the three centers. This presentation will focus on the application development and readiness work ongoing at the OLCF in the context of ADAC, and in support of delivering fundamental, GPU-accelerated capabilities to the computational scientific user community. |

| Title | Daino: A High-Level Framework for Parallel and Efficient AMR on GPUs |

| Speaker | Mohammed Wahib Attia |

| Abstract | Adaptive Mesh Refinement (AMR) methods reduce the computational requirements of problems by increasing resolution only in areas of interest. In practice, however, efficient AMR implementations are difficult because the mesh hierarchy management must be optimized for the underlying hardware. The architectural complexity of GPUs can make efficient AMR particularly challenging on GPU-accelerated supercomputers. This paper presents a compiler-based high-level framework that can automatically transform serial uniform mesh code annotated by the user into parallel adaptive mesh code optimized for GPU-accelerated supercomputers. We also present a method for empirical analysis of a uniform mesh to project an upper bound on the achievable speedup of a GPU-optimized AMR code. We show experimental results on three production applications. The speedups of code generated by our framework are comparable to hand-written AMR code while achieving good strong and weak scaling up to 1000 GPUs. |

| Title | Advances in Big Data Machine Learning for Fusion Energy Applications |

| Speaker | William Tang |

| Abstract | Building the scientific foundations needed to develop fusion power in a timely way can be facilitated not only by familiar “hypothesis-driven”/“first-principles” approaches but also by engaging modern big-data-driven statistical methods featuring machine learning (ML), an exciting R&D approach that is increasingly deployed in many scientific and industrial domains. The recent growth and success of machine learning in industry (e.g. Google, the new Internet services economy, etc.) suggests that these techniques can be formulated and adapted to enable new avenues of data-driven discovery in key scientific application areas such as Fusion Energy, with exciting potential for accelerating scientific knowledge discovery. An especially time-urgent and very challenging problem facing the development of a fusion energy reactor today is the need to deal reliably with large-scale major disruptions in magnetically-confined tokamak systems such as the EUROfusion Joint European Torus (JET) today and the burning plasma ITER device in the near future. Significantly improved methods of prediction with better than 95% predictive capability are required to provide sufficient advance warning for disruption avoidance or mitigation strategies to be effectively applied before critical damage can be done to ITER, a ground-breaking $25B international burning plasma experiment with the potential capability to exceed “breakeven” fusion power by a factor of 10 or more. This truly formidable task in Fusion Energy Science (FES) demands accuracy beyond the near-term reach of the hypothesis-driven/“first-principles” simulations that dominate current research and development in the field. Statistical ML methods trained on very large data sets hold significant promise for delivering the much-needed FES predictive tools that can be generalizable at the basic level to multiple application domains. |

| Title | Applying Hierarchy to Scaled Systems |

| Speaker | CJ Newburn |

| Abstract | Many applications involve a high degree of communication; the speedups they can achieve on hardware platforms are limited by how well those platforms support strong scaling. Even applications that exhibit significant phases of independence often need to communicate periodically, and the efficiency of weaker scaling hinges on how well tuners manage locality and communication. In this talk, we propose topologies that enable greater scaling, and highlight tasking framework and programming model features needed to enable a combination of strong and weak scaling. The hierarchical system design is supported by a combination of features that enable universal load/store access to distributed memories, and that can manage the scope of synchronization. These are matched with programming model features that ease access to distributed memory but that can minimize the scope of synchronization. Finally, we provide an evaluation of some workloads from high performance computing and deep learning that illustrate the benefits of such hardware and programming model support. |

| Title | FLOPS to BYTES: Accelerating Beyond Moore's Law |

| Speaker | Satoshi Matsuoka |

| Abstract | The so-called “Moore’s Law”, by which the performance of processors increases exponentially by a factor of 4 every 3 years or so, is slated to end in a 10-15 year timeframe, as the lithography of VLSIs reaches its limits around that time, combined with other physical factors. This is mainly due to transistor power becoming largely constant; as a result, means to sustain continuous performance increase must be sought other than increasing the clock rate or the number of floating point units in the chips, i.e., increasing the FLOPS. The promising new parameter in place of the transistor count is the perceived increase in the capacity and bandwidth of storage, driven by device, architectural, as well as packaging innovations: DRAM-alternative Non-Volatile Memory (NVM) devices, 3-D memory and logic stacking evolving from VIAs to direct silicon stacking, as well as next-generation terabit optics and networks. The overall effect is that the trend to increase computational intensity, as advocated today, will no longer result in performance increase; rather, exploiting the memory and bandwidth capacities will be the right methodology. However, such a shift in compute-vs-data tradeoffs would not exactly be a return to the old vector days, since other physical factors such as latency will not change when spatial communication is involved in X-Y directions. Such a conversion of performance metrics from FLOPS to BYTES could lead to disruptive alterations in how computing systems, both hardware and software, evolve in the future. |
